The lottery ticket hypothesis for gigantic pre-trained models

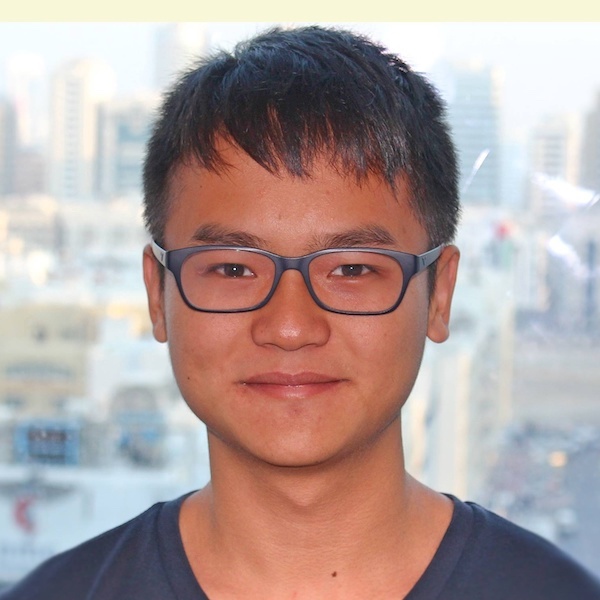

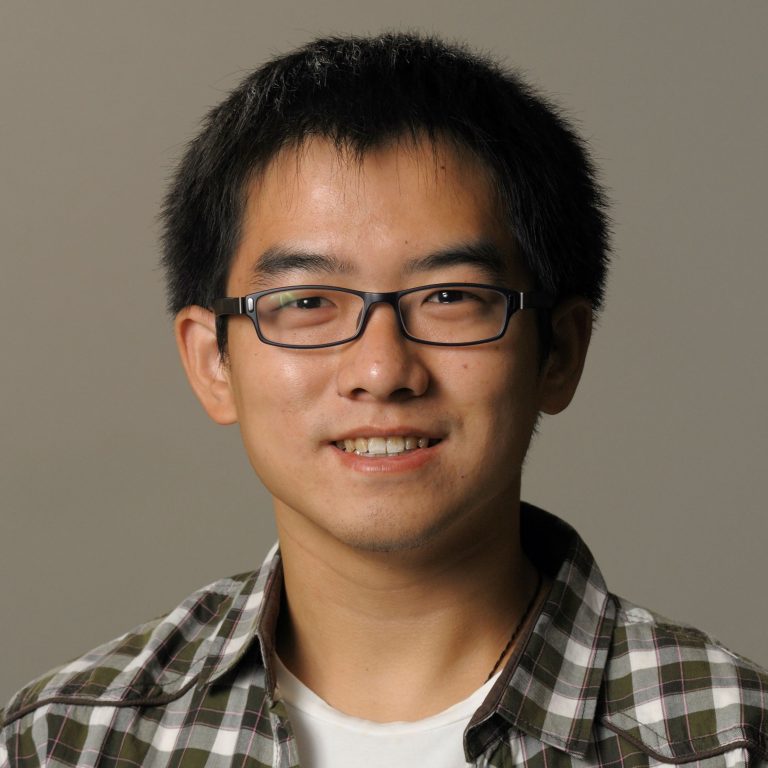

Prof. Atlas Wang

Professor Atlas Wang is currently an Assistant Professor of Electrical and Computer Engineering at UT Austin, leading the VITA research group (https://vita-group.github.io/). He is broadly interested in the fields of machine learning, computer vision, optimization, and their interdisciplinary applications. His latest interests focus on automated machine learning (AutoML), learning-based optimization, machine learning robustness, and efficient deep learning. He has received many research awards and scholarships, including most recently an ARO Young Investigator award, an IBM Faculty Research Award, an Amazon Research Award (AWS AI), an Adobe Data Science Research Award, and four research competition prizes from CVPR/ICCV/ECCV.

In NLP and computer vision, enormous pre-trained models have become the standard starting point for training on a range of downstream tasks. In parallel, work on the lottery ticket hypothesis has shown that models contain smaller matching subnetworks capable of training in isolation to full accuracy and transferring to other tasks. We have combined these observations to assess whether such trainable, transferable subnetworks exist in various pre-trained models. Taking BERT as one example, we indeed find matching subnetworks at 40% to 90% sparsity across a range of downstream tasks. We find these subnetworks at (pre-trained) initialization, a deviation from prior NLP research, where they emerge only after some amount of training. As another example, in computer vision, from pre-trained weights obtained by ImageNet classification, SimCLR, and MoCo, we are also consistently able to locate matching subnetworks at 59.04% to 96.48% sparsity that transfer to multiple downstream tasks with no performance degradation compared to using the full pre-trained weights. As large-scale pre-training becomes an increasingly central paradigm in deep learning, our results demonstrate that the main lottery ticket observations remain relevant in this context. A project webpage is available at: https://tianlong-chen.github.io/Project_Page/index.html
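
For readers new to the terminology, "sparsity" here is the fraction of weights set to zero by a binary mask applied to the pre-trained weights. The sketch below is illustrative only and is not taken from the talk. It assumes magnitude-based pruning (removing the smallest-magnitude weights) with a fixed 20% of the remaining weights removed per round. The abstract does not state this schedule, although it reproduces the quoted 59.04% and 96.48% figures after 4 and 15 rounds. The helper names `sparsity_after_rounds` and `magnitude_mask` are hypothetical.

```python
def sparsity_after_rounds(rounds, prune_fraction=0.2):
    """Fraction of weights removed after `rounds` pruning rounds.

    Assumption: each round removes `prune_fraction` of the weights
    that are still unpruned, so the kept fraction shrinks geometrically.
    """
    return 1.0 - (1.0 - prune_fraction) ** rounds


def magnitude_mask(weights, sparsity):
    """Return a 0/1 mask that zeroes the smallest-magnitude weights.

    `weights` is a flat list of floats; `sparsity` is the fraction to prune.
    """
    n_prune = int(round(sparsity * len(weights)))
    # Indices sorted from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_prune]:
        mask[i] = 0
    return mask


if __name__ == "__main__":
    for rounds in (4, 15):
        print(rounds, round(100 * sparsity_after_rounds(rounds), 2))

    toy_weights = [0.5, -0.1, 0.03, -2.0, 0.7]
    mask = magnitude_mask(toy_weights, sparsity=0.4)
    subnetwork = [w * m for w, m in zip(toy_weights, mask)]
    print(mask, subnetwork)
```

In a lottery-ticket setting, the masked weights would then be trained on a downstream task with the mask held fixed, and the subnetwork counts as "matching" if it reaches the accuracy of the full model.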